Research Projects

QuantiPhy: a Quantitative Benchmark Evaluating Vision-Language Models’ Physical Reasoning Ability

Researcher, Supervised by Prof. Fei-Fei Li and Prof. Ehsan Adeli

- Create a controlled video dataset of physical motion that combines Blender simulations, real lab captures, and curated internet videos, each with known ground-truth physical quantities.

- Design quantitative estimation tasks that require inferring physical quantities (e.g., speed, acceleration, distance) directly from video.

- Evaluate both humans and VLMs on the same tasks to compare their accuracy and analyze how background complexity, motion type, and data source affect reasoning performance.

Real-Time Watermarking for VR Motion

Lead Researcher, Supervised by Prof. Dianna Xu and Prof. Aline Normoyle

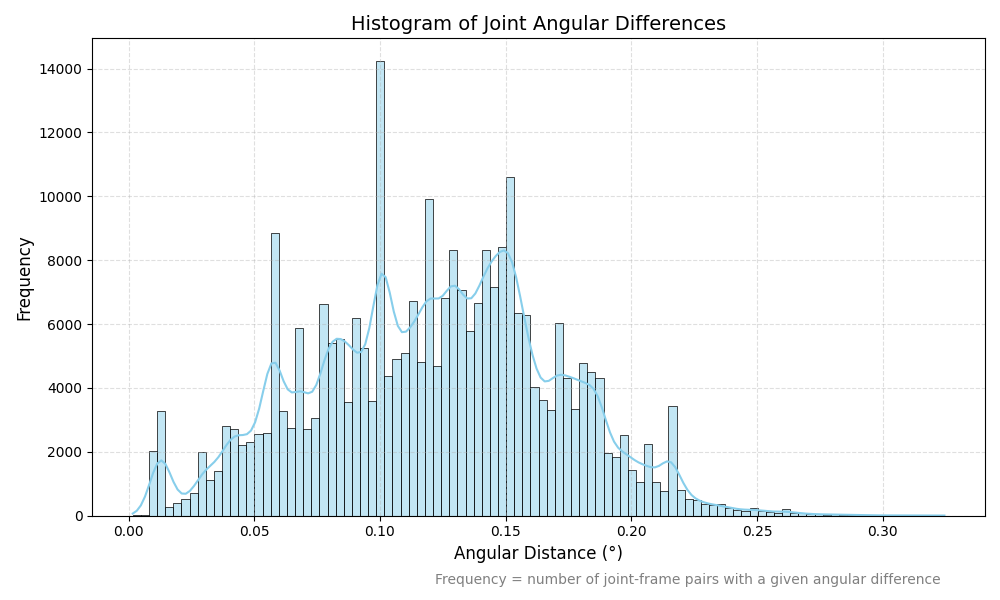

- Developed a real-time watermark embedding system using Quantization Index Modulation (QIM), optimized for VR motion data with angular velocity-based filtering.

- Simulated various real-world distortions, including frame rate resampling, partial frame loss, and motion blending, to test watermark robustness.

- Evaluated performance using both text and image watermarks, comparing watermark recovery accuracy, visual impact on the motion, and resilience under different attack types.

Digital Doppelgangers

Co-Researcher, Led by Prof. Aline Normoyle

- Developed realistic digital replicas of people, using 3D scanning to create lifelike avatars in Unreal Engine.

- Integrated voice cloning, speech recognition, and gesture capture, along with gaze and blink animations, so that the digital avatars closely mirror real-world interactions.

Immersive VR Theater

Co-Researcher, Led by Prof. Aline Normoyle

- Created an immersive theater experience combining the Meta Quest 3 VR headset with physical puppetry, using motion capture to keep the physical and digital elements synchronized.

- Enhanced motion capture precision by optimizing reflective marker placement for stable camera visibility and refining camera calibration in Vicon Shogun.

- Implemented inverse kinematics in Unity to drive realistic joint movement of a 1:1-scale puppet, modeled and rigged in Maya, from motion capture data.

- Preparing to open the experience to volunteer testers for evaluation and further refinement.


Simulation and Visualization of Reflective Surfaces

Co-Researcher, Supervised by Prof. Dianna Xu

Frances Velay Women’s Science Research Fellowship (2024)

- Created an island scene in Blender with various reflective objects for testing mirror reflection techniques.

- Implemented several reflection methods in OpenGL on the island scene: cubemap reflection, planar reflection, and screen-space reflection (SSR).

- Compared the methods' visual quality and computational cost on objects with different reflective-surface sizes and surrounding scene complexity, using timing measurements and side-by-side visual comparisons.

- Based on this analysis, developed a hybrid technique that assigns a different reflection algorithm to each object, significantly improving visual realism at the cost of additional rendering time.